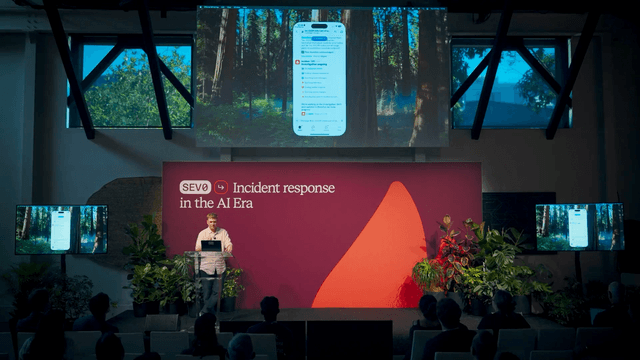

incident.io Product Showcase

Pete Hamilton, CTO, Lawrence Jones, AI Engineer, and Ed Dean, AI Product Manager, run through our new AI SRE product. AI SRE shortens time to resolution by automating investigation, root cause analysis, and a fix, all before you’ve even opened your laptop.

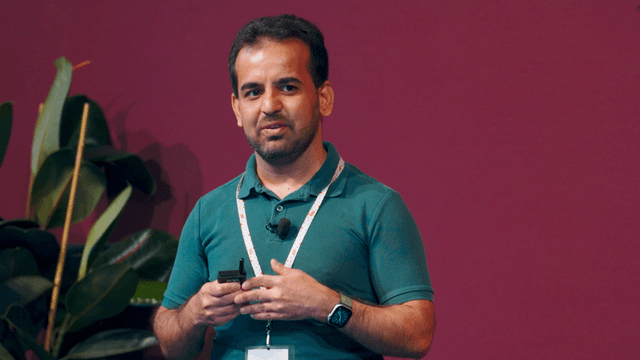

Pete Hamilton, Co-founder and CTO, incident.io

Lawrence Jones, Principal Engineer, incident.io

Ed Dean, Product Manager, AI, incident.io

The transcript below has been generated using AI and may not fully match the audio.

Pete: I am Pete, CTO and co-founder of incident.io. It's great to be back at SEV0. I was here very fleetingly last time. My daughter had just been born, so I managed to see some of you, but it was literally fly in, fly out, turnaround. It's great to be here and be able to spend the full day, especially 'cause we have some absolutely amazing things to show you from the product team.

So, onward. Speaking of the product team, this is them behind me. Obviously I get to stand up on the stage with a couple of them, but these are the folks who put in all the hard work on what you're about to see. And for such a small team, I'm consistently blown away by the amount that they can get done.

It has literally been, for the last couple of weeks, almost 24/7. But regardless, I think it's really impressive work. Some of 'em have flown over from London as well; that's where a lot of our engineers are. And you can go talk to them in the break to see this demo in action, up close.

Before we do that though, I want to wind back the clock, as it were, and talk about some of the early days at incident.io, and take us on a bit of a journey to where we are today. Steven touched on some of this earlier. I spent a lot of my career on call, as have Steven and Chris, and in our experience, coordination and communication were the main problems.

So naturally, that's where we started when we kicked off incident.io, and that grew into the product now known as Response, focused on better coordination, communication, and automation. I think it was a huge step up: a single source of truth, all your incidents in one place.

Since then, over 170,000 people have solved nearly half a million incidents with incident.io. And I think that's pretty cool for one product. But naturally, customers always want more, as you do, very rightfully.
And one of the integrations we had, with status pages, got a lot of pull: what if you took the same craft and quality you've put into your main product and you built a status page product? And we said, you know what? You guys are right. We should do that. And after putting that together, a few years in, we're now at the point where some of the most well-known brands in the world trust us with their customer comms. You see a few up there: OpenAI, Square, many others, many of them in this room. And we are looking at 600 million visits a year to status pages hosted on incident.io, coming up on a billion probably over the next six to 12 months, which is pretty cool, again, for product number two.

Then comes product number three. Not content with status pages and Response, the next most popular feature request we have ever had is: please, for the love of God, can you build a better paging product? And again, we felt the same way. We really wanted to do this. This was one that we pushed a little bit further back because we wanted to get it right.

And so we waited until sort of March 2024 to do this. We launched it. Since then, and we're about 18 months later now, 72% of the customers on our platform have moved over to incident.io Oncall, both enterprises and smaller commercial customers, and 90% of the paging that happens for anyone using incident.io happens directly through our Oncall mobile app, which I think is pretty cool.

The final thing I wanna touch on, and this is very relevant to some of the stuff you're about to see, is that, as Steven showed earlier, we have some foundational capabilities as well. So you're thinking workflows, insights, reporting, integrations and Catalog, which I've highlighted here. And the real goal of Catalog is to do two things.

One, be a really powerful way to bring third-party data into incident.io. Up until the point we had Catalog, we were pretty much just all about incident data. Catalog was the first time that we'd brought in CRM data.
We'd brought in support data, and we started to weave that directly through the platform so that you could automate things like: when we get paged, look up the ID in my customer database, figure out who this customer success manager is, and pull them into the channel automatically.

Suddenly workflows get a massive upgrade. And this is particularly relevant for a second reason, which is that if you're gonna go and invest heavily in AI and systems that can dig into how bad this incident is, this is the exact kind of data that you need in order to be able to do a good job of that. As Steven touched on earlier, there are really two reasons that we've done all of this, if you look over the whole product suite as it stands today.

One is to help you run a great process. If I was to score us, I think we've done a pretty good job of this; hundreds of thousands of users and almost half a million incidents would suggest that is the case.

The second is to help you fix the problem faster. As Steven mentioned, I think we've helped in a way: having a more coordinated incident, having better comms, it does help you fix it faster. But it always felt like we were one step removed from the messy stuff, the fiddly stuff, like what is actually wrong? What is actually happening in our logs and telemetry? We were very reliant on people telling us that, and then us helping them the rest of the way. And so this is the thing that we've always had our eye on. It's really been a thing where we didn't feel we were in a position to go after it.

Sure, machine learning, AI, et cetera. But until recently, with the rise of things like LLMs, we didn't really have the technology to go do this stuff really well. I think all of that has changed in the last year or two, and what we're about to show you is how you can take LLMs and leverage all the context that we already hold, which positions us really well to go after this second part of the problem space.

And if you do both really well, what does that look like in an end-to-end incident response platform? So the first thing that we are gonna show you today, and there's gonna be three, is gonna be AI SRE. This is our investigative agent (that's a mouthful) that works to find and fix incidents better, right alongside you.

As with all things AI right now, the devil is in the details, I think, right? We've all seen enough demos, videos, things where it's: trust me, it works, you can't come touch it, don't ask me questions. And perfect conditions, I think, don't make for a good conference. Also, Steven just outright said you have to do it live. I won't tell you what my response to Steven was at that point, but he repeated it so much that we've ended up doing it live. So whether this goes brilliantly or terribly, it's all on him. We're gonna throw caution to the wind and we're gonna do all of it live. So my bit's gonna be live, and Ed's gonna do some and then come back to me at the end. It comes with a risk, but I think it's worth it.

See by my face at the end whether or not I agree with what I've just said. Onwards. Enough talk, let's go break some stuff in production.

So, I'm using the incident.io mobile app. In a few moments I'll probably get a page. It's a real one. This is our production account, literally with incident.io. And I'm expecting in a minute to hear one of the dulcet tones from The Chainsmokers. Huh? Oh, sorry, that was well timed. Cool. You guys can't actually see the notification 'cause of how the mirroring works, but let me jump into the mobile app anyway. The first thing you can see is, inside incident.io, I have the opportunity to acknowledge. I'm gonna do this.
We've all done this millions of times, and I'm gonna jump straight into the Slack channel and see what's going on.

The first thing you'll notice is that AI SRE is already at work on this incident. Just like I normally would, it's analyzing the error. It's looking at recent changes and pull requests, it's jumping into dashboards, it's checking to see if there's anything relevant mentioned in Slack. And just like I would be, it's going: I know I've seen this somewhere before. When have I already solved this?

I am 10, 15 years into running incidents at this point. Even on my best day, with all the tools available, a coffee in my hands, fresh brain, first thing in the morning, I can't look at 10 tabs at the same time. I can't log into five systems at once. I can't wrangle tools while doing something else. And I certainly can't process, like, thousands of data points, right? I'm just a person. In comparison, AI SRE can do all of that, and you can see it happening live on stage right now. This is real, by the way, so fingers crossed.

It's using everything it learns to come up with the best guess of what's happening. And normally what I would do at this point is probably jump straight into the desktop, just to get a slightly better view of what's going on. So let's do that. We've split this incident into a separate channel, just so you don't have to look at our production account.

And if I jump in, you can see AI SRE sort of continues plugging away here. One thing to call out is that these individual checks that you see happening, with the nice little spinners, are not one-shot prompts. These are not a deterministic series of steps. My team didn't write these. They've essentially built the agents. They told them how to work together, and the rest is all non-deterministic from AI SRE.
Behind what you're seeing here is a bunch of multi-agent systems analyzing huge amounts of information (thousands of pull requests, Slack messages, the works), and they're then coming back together in a kind of scatter-gather mode, with another agent going: what do you know? What have you found? What have you found? Can we pull all that into a view on what's happening in the incident? And you can see that's exactly what's happened here, which even at this point, I think, is pretty cool. And I think the team deserve a round of applause for getting here.

So it has given me a first pass, and you can see it'll keep investigating as more information comes in. It's given a first pass on what's going on, what's caused it, and a couple of next steps here. And all the screens have just gone black. I didn't expect that bit to be the thing that broke. But thank you for keeping me on my toes.

Okay, let's carry on. The nice thing about this is you get incredibly bespoke observations and suggestions. So rather than this just being a playbook where you chuck some stuff into ChatGPT and it gives you some run-of-the-mill output... Do I need to pause, guys?

Hello?

Yeah, spoken like someone who's been at many conferences. Thank you, Molly. So you get incredibly bespoke insights as a result of this, right? This isn't just lobbing some context into an LLM. You're gonna get things that only my organization will know, that would only be relevant to us.

It's gonna tell me: you should run the script that Martha ran last month for a similar incident, because it looks like it's gonna be the same root cause and I think it might fix it. It's gonna do things like suggest we pull in Lisa, because last time this particular thing came up, she got pulled in too late, and as a result, the incident was 10 times worse. It's gonna do things like say: last time I saw error rates this bad for this service, it resulted in a multi-day, million-dollar outage.
So you might wanna get a status page up and running right now. These are the kind of unique insights that you just can't achieve by taking the exception from Sentry and lobbing it at one of the many fantastic frontier models, although obviously we do use all of that under the hood (OpenAI, Anthropic and others) to power many of the experiences that you're seeing here.

The key thing I wanna call out is those complicated, incredibly unique situations. Those are the things that we've set out to solve with AI SRE. The goal here is not that simple code fixes get solved quickly. The goal here is the really hard-to-unpick stuff that engineers wouldn't know automatically at 2:00 AM. They're the things we want to accelerate. So you can see here, it doesn't only want to tell me what it thinks is going on. Wherever we can, we're gonna do our best to help you resolve things as fast as possible.

And for a certain set of changes, and in this case it's figured out that there's a sort of code change that might be relevant... lemme just get rid of this random bit of flack... it can actually put together a pull request for me. So it's gonna ask me, and you saw some of this in the video earlier: do you want me to put together a pull request to help solve the problem? This being a live demo, we are obviously going to say yes, and we're gonna see what happens.

At this point, I'm gonna do a couple of things while it chugs away. This is the kind of stuff that I would do in reality. For starters, it's obviously figured out what the bug is. So let's say: can you explain the bug in more detail?

Also, by the way, I forgot to even introduce this. This is just me and autopilot. You can talk directly to it. It's not just an ambient agent. I can ask questions, I can interact with it, and it uses all the same AI platform under the hood. Next thing I'm gonna ask it: can you give me a better idea of the impact?
Why did I make this so long? On traffic in the past 15 minutes, for example. So I'll kick that off as well. In the meantime, let's go check out how that bug is getting on. Cool. So it's actually figured out that there's a really nuanced, edge-case off-by-one error in the code that's being triggered by a bad payload.

That's kinda cool. Can you explain it like I'm five? I call this CTO mode.

Okay. I'll give it two seconds to come back to me with something there.

In fairness, it's not wrong. That's actually pretty good. I can see it has drafted the pull request here, which is pretty epic, and we're gonna go take a look at that in just a second. But before we do, let's just take a look at the telemetry that it found. So yeah, this all tracks. For what it's worth, it's a 10 to 20% error rate from just chucking bad payloads at it. And I can see the request rate has dropped a little bit and the error rate has massively spiked. So, useful graphs. We'll come back to those a little bit later.

But let's go look at this. We have a pull request. I think that's pretty cool in the grand scheme of things. I can see it's written out: this is what I found, this is what I think will fix it. And more importantly, it has actually done that. So you saw it was talking about the off-by-one error and some of the maths. It's figured out that this is the suspicious bit of code, and it's written tests, which is much more than I've done at some points in my career. In a minute this will be a passing PR that will hopefully be able to go on its way to production.

For now, I'm gonna flip back to Slack and do a couple of additional checks that I think incident.io is uniquely placed to do here. So I'm gonna ask it: can you check Catalog for any upstream dependencies, if I can remember how to spell dependencies.

Cool. At this point, it's going to use the information that it has already collected from the code agent earlier on.
It's built its knowledge base as we've gone, and it's gonna use the Catalog to figure out, given this is the code, which features depend on this bit of the codebase. Then it's gonna say: which teams own that feature? And also, are there any other features that depend on that feature? It's spidering out in this web, like you would as an engineer, figuring out what the potential knock-on impacts and blast radius are here. And if I jump into here, I can see Smart Correlation depends on the Alert Insights feature and the Applied AI team.

Cool. At this point, it's probably sensible to get them in the room, given that I've just made their day a bit miserable. So I'm gonna say, can you page them please? I think I've broken... oh, there's stuff. Pretty standard CTO move at this point. incident.io is gonna go all the way through that chain of reasoning that we just talked about. It's gonna figure out, for the team that owns it, who's on call right now, and then it's gonna page them. You can see here, I didn't touch anything. It's just done it. And it's paged Rory, whose phone will probably go off somewhere in the audience. I dunno actually where he's sat at this point.

And in a minute... there we go. Rory's acknowledged and he's on his way. And so incident.io kept me up to date the entire way through. I can see help is on the way. Yes, I am demoing this right now, so if you could handle the PR, that'd be great. I only have two hands. And so at this point, with Rory kind of figuring stuff out and taking over and actually fixing the problems and taking them out to production, I wanna pause and reflect on everything we've just seen, 'cause it's been quite a rapid-fire demo.
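
As an illustration only, the chain of reasoning in this part of the demo (walk the dependency graph, find the owning teams, look up who is on call) can be sketched as a small graph traversal. This is not incident.io's implementation or API: the dictionaries, the feature and team identifiers, and the function names below are all hypothetical stand-ins for Catalog data.

```python
from collections import deque

# Hypothetical Catalog data. Keys and values are invented for illustration.
# DEPENDENTS maps a feature to the features that depend on it.
DEPENDENTS = {
    "alert-insights": ["smart-correlation"],
    "smart-correlation": [],
}
OWNERS = {"alert-insights": "applied-ai", "smart-correlation": "applied-ai"}
ON_CALL = {"applied-ai": "rory"}


def blast_radius(start, dependents):
    """Breadth-first walk: the starting feature plus everything that depends on it."""
    seen = [start]
    queue = deque([start])
    while queue:
        feature = queue.popleft()
        for dependent in dependents.get(feature, []):
            if dependent not in seen:
                seen.append(dependent)
                queue.append(dependent)
    return seen


def responders_to_page(start, dependents, owners, on_call):
    """Resolve affected features to owning teams, then to whoever is on call."""
    teams = {owners[f] for f in blast_radius(start, dependents) if f in owners}
    return sorted(on_call[team] for team in teams if team in on_call)


if __name__ == "__main__":
    print(blast_radius("alert-insights", DEPENDENTS))
    print(responders_to_page("alert-insights", DEPENDENTS, OWNERS, ON_CALL))
```

Deduplicating at the team level means one person is paged once even when they own several affected features.
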
The first thing is, rather than getting paged with a cryptic error message, figuring out a plan myself, logging into 20 different systems and putting together a picture, and then sequentially evaluating one hypothesis at a time, AI SRE was on the problem right away. It's checked a ton of different tools and integrations, pulled together thousands of data points and come up with an initial hypothesis way quicker than I probably could have. If you replay it, it was maybe two minutes. It feels like about three hours when you're on the stage, but I think it's about that.

Second, rather than going off to figure out who I need, looking into a third-party system to see which teams own what, then going into a different system to find those teams' on-call schedules and who's on call right now, paging them, coming back, and remembering that I forgot to tell them what Slack channel I'm working in, I just told incident.io I want whoever's on call for any upstreams of this. And it went and did that magically and automatically.

And finally, AI SRE literally identified the bug. I didn't tell it to. There's no flow charts here. It just went: this looks a bit suss, it's probably code. It pulled out some relevant findings, things in Slack, things in GitHub, and it narrowed down what the issue was and then it fixed it. And all I had to do was say, yes, please.

To me, this is magic. This is the power of an incident response platform end to end, and what happens when you weave all of these products together and then apply really meaningful and well-designed AI on top. And I think at this point, with a bunch of... ah, okay, it's just done it. Great. Usually at this point there's enough information, and as Rory types a bunch in the channel, incident.io will pick up on the fact that a lot of things have changed and it'll say, hey, it's probably time for an update.
And we won't demo all of these features, but there's a lot of this coordination and communication kind of advice and steering built directly into the platform.

In this case, you can see it's referenced the telemetry, it's referenced the pull request, it's referenced all the context that it's built up as we've been demoing this. And I'm gonna accept that and share the update. At this point, all my stakeholders know what's going on. Brilliant. We can get back to fixing stuff.

One thing that I wanna be really clear on is that we've demoed it in Slack because it's just a nice, familiar interface to do it. This is not just Slack, right? This is everything AI SRE has found. It's everything incident.io knows, and it's all attached to the investigation, which means it's available everywhere in the incident.io platform. If we go to the dashboard, everything I've just done, I could be doing in there as well. And I'm really excited for Ed to show you some of this a little bit later.

But first, I imagine a lot of you are looking at this and being like, how on earth does this work under the hood? Is it really as interesting as you say, and what's happening? So rather than hiding all of that away, I'm gonna bring up Lawrence, who's been working on this for the last however many months at this point. And he's gonna give you a bit of a behind-the-scenes look at exactly what AI SRE is doing under the hood, and everything that makes what you just saw possible. So, Lawrence.

Lawrence: Hi. I'm Lawrence and I lead AI at incident.io. So Pete's spoken about the experience of working with AI SRE as an incident responder, but I want to focus a bit more on behind the scenes. So what have we built to power this product, and why has it taken us, honestly, about 18 months to get ourselves here?

Firstly, I think it's worth stating that building a generic incident solver is actually really quite difficult.
And we've had to build a ton of internal tools that let us see inside the system so that we can actually understand how it's performing and figure out, when it goes wrong, how did it go wrong and how can we improve it?

So to give you a sense of what this looks like underneath the covers, I've actually recorded one of Pete's many demo rehearsals in one of our internal investigation dashboards, which is how we as engineers understand how the product is working. We'll walk back through the incident that you just saw, and I'll stop at each stage so that you can see exactly what's happening under the hood.

So what you're seeing here is a real video of an admin-only view, something that we use as staff on top of investigations, which shows how AI SRE is building its understanding of the incident as it progresses. Naturally, we are starting with the alert. We end up connecting with some of our integration providers. In this case it's Sentry, and we reach inside of Sentry, pull the exception into our system, and start analyzing it to understand what's going on. This is emulating the first steps that you as a human responder would take. If you were ever paged in the middle of the night, you'd probably open Sentry and go: cool, where is this actually happening? What's actually gone wrong?

But it's at this point, once we've analyzed our alerts, that things start getting a bit more interesting, and we go beyond what a normal human responder might be capable of. So whilst an experienced responder can look at a Sentry exception really quickly, it's what comes after where AI really shines.

We're about to start searching thousands of GitHub pull requests. We're going to look through all of the Slack messages from channels that you have connected to the system, and we're gonna search them for anything that might be relevant to the incident, using what we saw in the alert to try and find good matches.

An exhaustive search like this is just not humanly possible.
It's certainly not possible inside a time-pressured incident context when you might be really urgently trying to respond to the problem. With AI it is possible, but it's not easy. In fact, you can see on the left, I've taken some of the traces from the investigation checks that we've been running as part of the system, and you can see how we're fanning out all of this work to tens of parallel workers.

These workers are then using the indexes that we build continually in our system, having looked at all of your data, so that they can quickly find relevant matches. Then they're pushing those matches through a ton of different filtering questions, asking things like: does this PR diff look like it could have caused the thing that this incident is about?

And we're using frontier models to do that. So it's not just vectorization and pulling stuff out of a database. We're actually crunching through the numbers and doing some genuine analysis. If done by a human, this might take days, but thankfully, because of AI and all of our investments in search infrastructure, prompt tuning and background indexing, we're able to find both the PR that caused the issue and relevant Slack messages in just 20 seconds.

It's not just external systems, though. I mean, you're all here because you know the value of learning from incidents, and actually more important than these external systems is looking through all of the historical incidents that we have inside of our platform for clues on how to solve this one. So we're not just searching against incident metadata. This isn't “find me an incident where it came from Sentry.” What we're doing is we're looking for incidents that match on a variety of different incident dimensions.
So we're looking at what type of error this is, what systems are impacted, even the time of day that the incident is happening, so that we can find incidents where we've seen problems like this before and get clues for how to solve this.

We're also not just looking at incident metadata either. When we do these matches, we're looking through everything that happened in those incidents. So we're looking through everything that happened in the incident channel, so all of the messages that you've sent to your colleagues. We're looking at any of the post-mortems that your responders have authored against those incidents. And we're also looking at any follow-ups, so anything that you may have exported to Jira or to Linear, to try and figure out how you fixed this before.

We use those incidents that we've found in this search to build an ephemeral runbook, which tells you how you should approach this incident based on how you've approached incidents of this type before. And it's more than just technical details too. It's about who to escalate to and when, and whether you need to engage with a regulatory body: all the stuff that normally gets forgotten in the heat of the moment in an incident. And you are learning continually from best practices that evolve over time, by looking at the incidents that you responded to most recently.

Having now found a bunch of useful data, it's starting to collate it into a report for responders. This is when we move into what we call the first assessment stage of the investigation, and it's at this point that our scheduler ends up splitting: it allows long-lived agents like code and telemetry to continue while we prepare our preliminary findings.

We're going to iterate on the analysis continually during the investigation. It's not just a one-time thing. In fact, this runs for the duration of the incident, so it can be many hours or possibly even days.
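
To make the scheduling idea above concrete, here is a minimal sketch, assuming an asyncio-style design: long-lived agents are started in the background, a first assessment is published as soon as the quick checks return, and the report is re-issued each time an agent reports a finding. This is not incident.io's scheduler. The function names, stage labels, and sleep-based delays are hypothetical placeholders for real work.

```python
import asyncio


async def quick_check(name, delay):
    """Stand-in for a fast check (alert analysis, PR search, and so on)."""
    await asyncio.sleep(delay)
    return f"{name}: done"


async def long_lived_agent(name, findings, delay):
    """Stand-in for an agent that keeps working and reports back when it learns something."""
    await asyncio.sleep(delay)
    await findings.put(f"{name}: finding")


async def investigate():
    findings = asyncio.Queue()
    reports = []

    # Start the long-lived agents first so they run alongside everything else.
    agents = [
        asyncio.create_task(long_lived_agent("code", findings, 0.05)),
        asyncio.create_task(long_lived_agent("telemetry", findings, 0.03)),
    ]

    # Fan out the quick checks and gather their results for the first assessment.
    evidence = list(await asyncio.gather(
        quick_check("alert", 0.01),
        quick_check("pull_requests", 0.01),
    ))
    reports.append({"stage": "first_assessment", "evidence": list(evidence)})

    # Iterate: fold each new finding into an updated report as soon as it arrives.
    for _ in agents:
        evidence.append(await findings.get())
        reports.append({"stage": "update", "evidence": list(evidence)})

    await asyncio.gather(*agents)
    return reports


if __name__ == "__main__":
    for report in asyncio.run(investigate()):
        print(report["stage"], report["evidence"])
```

The first report never waits on the slow agents, so responders get something to act on early and refinements arrive later.
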
The goal is to get responders the most up-to-date understanding of the incident in real time. And we've actually put a lot of effort into trying to get these results to you really fast, so that even in critical incidents where every second matters, we are telling responders exactly what they need to know and giving them actionable advice as soon as we know what we should be recommending to them.

All of this means that the investigation report comes into the channel just two minutes after the pager fires. Now, thankfully, we've done this analysis, and that is actually before most on-callers get to their laptop. While we've been building our first report, the telemetry and the code agents have been continuing the investigation. We've been broadening our understanding of impact by checking Grafana dashboards and looking at logs, and we've confirmed that the bug exists through analysis of our codebase. So that's actually pulling down the code and really genuinely going through the code that is impacted by this bug.

This is a multi-agent system, and getting agents to collaborate in real time has honestly been one of the hardest aspects of building this system. Both the code and telemetry agents can stay on task for the duration of the incident, which as I said could even be on the order of days. And as they find new information, they end up communicating it back to the main agent as soon as they find it. That allows us to update responders in real time as they learn.

So it's around now that we see that the code agent concludes it understands the problem, it matches our incident, and actually it can probably fix it. So we start planning a fix, which is when we actually look at the code and figure out how we're gonna change it to try and fix this problem. And as soon as it's ready, we message the channel with a PR, which is what you saw in Pete's demo just before. I want to stress that we're now just two minutes and 40 seconds after the incident first started. So that's pretty quick, I think you'll all agree with me.

Now we come into the final iteration of the investigation, and this is when we are going to update our report, stating everything that we've found. That is going to reference Lisa's cautionary message about how optimizations like this have gone wrong before. It's going to explain why the code is wrong. It's interpreted error rates from Grafana, and it's confirmed that we have a fix ready.

What we've done here is we've taken everything a human responder would do as part of responding to this incident, and we've encoded it into AI SRE. But unlike a human responder, AI SRE can exhaustively search anything that you'd ever want to look at, and do it before a human has even properly woken up. It's all finished in just three minutes, 30 seconds, despite representing about an hour of wall-clock time as measured by all of the AI interactions and processing that we've run.

That's how it works. But for anyone in the room who's been building AI systems, and I'd imagine that's probably many people, getting something working is probably just the start of your journey. By far the most difficult work in the last year has been finding a way to build a system like this and prove that it actually works, that it's reliable, and that when it's running on customer accounts, it's doing the right thing, and to catch it when it goes wrong. And that's why we've created an evaluation system that can grade AI SRE, and that system produces a scorecard that looks something like this.

So this scorecard grades every aspect of the investigation.
It allows us to debug each step as part of the process without ever needing to look directly at incident data, which for us is important because we don't want to be looking at our customers' data, but we do want to know how the system is performing.

These scorecards tell us how we're performing in customer accounts. And when we change the system, we'll rerun it against historic incidents across all of our customer base, and we'll use this evaluation system to quantify whether or not we've improved it, or if we've introduced regressions, so that we can avoid harming the experience and ensure that we're improving it consistently.

It's this tooling that allows us to safely upgrade to the latest frontier models as soon as they're out. And we typically do, often within a day of them becoming available. And it helps us tune our system for all of our customers instead of overly fixating on a handful.

That's a look behind the scenes, and it shows you how much goes into powering AI SRE. We've had to solve so many hard technical challenges as part of building a system like this, challenges that, honestly, the industry as a whole is still figuring out. Agentic systems are hard; multi-agent systems on large-scale datasets with ambiguous problems running with real-world time constraints are about as tough a challenge as it gets.

But the great thing about AI SRE being so difficult to build is that it's forced us to solve some really hard AI problems in a general fashion, and that's produced a ton of tooling and a ton of innovations that we can now use to reimagine the entirety of the rest of our product.
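
The evaluation loop described above (rerun a changed system against historic incidents, then compare scorecards) can be sketched roughly as below. This is an assumption-laden illustration, not incident.io's evaluation system: the check names, the 0.0 to 1.0 grading scale, the `tolerance` threshold, and the `compare_scorecards` function are all hypothetical.

```python
from statistics import mean


def compare_scorecards(baseline, candidate, tolerance=0.02):
    """Compare per-check grades (0.0 to 1.0, one grade per historic incident).

    Returns a verdict per check: "improved", "regressed", or "unchanged".
    """
    verdicts = {}
    for check, baseline_grades in baseline.items():
        delta = mean(candidate[check]) - mean(baseline_grades)
        if delta > tolerance:
            verdicts[check] = "improved"
        elif delta < -tolerance:
            verdicts[check] = "regressed"
        else:
            verdicts[check] = "unchanged"
    return verdicts


# Hypothetical grades for the same four historic incidents, before and after a change.
BASELINE = {"root_cause": [1.0, 0.0, 1.0, 1.0], "next_steps": [0.5, 0.5, 1.0, 1.0]}
CANDIDATE = {"root_cause": [1.0, 1.0, 1.0, 1.0], "next_steps": [0.5, 0.5, 0.5, 0.5]}

if __name__ == "__main__":
    print(compare_scorecards(BASELINE, CANDIDATE))
```

Because only grades are compared, a check like this can flag a regression without anyone reading the underlying incident data.
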
It's also, for example, how our chatbots feel as smart as they do, because all the work that we've done on the long-lived agents that run as part of the investigation process is exactly what we ended up rolling out to the chat experience, and why they can solve multi-step problems in a way that you may not have seen in other products before.

I'm really proud of what we built here, but I'm even more excited about what happens when we take everything that we've learned from building AI SRE and apply it to the rest of the product. I'm now gonna hand over to Ed, who's gonna walk you through some of the first steps in that direction.

Ed: The incident you saw from Pete was a classic example of being paged and jumping straight into investigating. But as you're probably all maybe a little bit too familiar, there are plenty of times when you're actually jumping into an incident midway through, and then you're scrambling to gather all the context you need to and figure out what you might wanna do to help.

So this next section of the demo, we're gonna run through a few examples that show you what the incident.io platform looks like in those contexts now. Okay, perfect. Looks like we're back.

Good to go. Awesome. We're good to go. Perfect. So for this next section of the demo, I'm actually gonna start off in my team page in incident.io. In the team page, I can see everything that's relevant to me in my team: things like incidents, alerts, escalations. I can also see who's on call and any incidents that are currently affecting my team right now.

So in this case, I can actually see two incidents. This one down here is the incident that Pete showed you in that first part of the demo. So that's where I'm gonna head to first. Maybe Pete's a little bit rusty nowadays, and could do with some support.

Perfect. Cool. So the first thing I'm gonna do once I get to this incident is I'm actually just gonna jump straight to the investigation.
The things that I wanna call out here are, one, you can see AI SRE is still working. That's because AI SRE is actually an ambient AI agent. It's constantly working behind the scenes to help you in every way that it can.

So as new information is emerging (that's things like messages in Slack, alerts, or changes in your telemetry), AI SRE is using those to inform new investigations and will continue working in the context of investigating the issue. Just as Pete was, it will share those new findings with you in Slack. In the context for me right now, where I'm just trying to get up to speed, this gives me a constantly up-to-date representation of what's going on in the incident.

As I look down the page, I can see a summary of exactly that. So it's gonna tell me what's going on, what it thinks caused it, and what I can do about that next. From here, I can dig into more detail in lots of places, wherever I need to. So first off, I can look into next steps. For each next step, there's a more detailed overview of why I might wanna do that next step, and exactly what I should do.

And there's also useful links in here if I wanna jump out and get extra context. As I continue down the page, there are also all of the findings that AI SRE is assembling. These are artifacts that it's using to put together the hypotheses that you've just seen above at the top of the page.

These are also visible in Slack as well. So if you look in the thread where we're posting findings, you can still see this detail in Slack too. What's really powerful here is I can see all of the breadcrumbs that AI SRE has assembled whilst it's thinking about the root cause. From here I can see, say, past incidents that are relevant, Slack messages that are relevant.

Often there'll be code changes, or telemetry here as well. I can look into them and see as much detail as I need to, but also link directly out to the underlying citation as well. Just coming back up to the top of the page.
And the final thing I wanna show you here is that I can actually just dig straight into how AI SRE has been reasoning.

So if I come here, I can see right at the top, this is the overview that you can see on the same page. But as I scroll down, you can see all of the steps that AI SRE is taking to build up its hypotheses. You can see it's been querying telemetry, looking for similar incidents, searching team messages.

These are some of the processes that Lawrence has just shown you. And again, this provides me the detail I need to get up to speed. Perfect. So say, in this situation, it looks like Pete's got it. We're in safe hands. So I'm actually gonna head back to my team page, and have a look at that other incident that's currently ongoing and affecting my team.

Cool. The first thing I can see here is that Sam and Martha are actually already working on it, and they're on a call right now. So obviously, calls are super important whilst you're responding to incidents, but usually one of two things happens when you jump on a call. Either nobody remembers to take notes and then everything you discussed can be easily forgotten, or somebody does, but then maybe that's taking up a lot of their time that could have been spent investigating and resolving the incident.

We've solved that problem with Scribe, which is on the call with Sam and Martha right now. So Scribe is a note taker, which transcribes incident calls and summarizes notes, decisions, observations, and actions in real time, and you can actually see that directly up on the screen here. So here's what they're currently discussing and some of the key actions or decisions that they've just made.

So what this means in practice is I can get a quick overview of what's going on in the call without having to interrupt Sam and Martha. If I need more detail, I can actually just jump into the notes and I can see the transcript and a full summary and the key points that they've been discussing
right here as well.

I'm sure you're all very familiar with that feeling, being on a crowded call where people are jumping in at different times. They all have different amounts of context. It can be pretty confusing and also super distracting when you're trying to get work done. But at this stage for me, as someone trying to get up to speed, what I really wanna ask is, "Hey, based on what you're discussing right now, is there anything I can do to help?"

Now, instead of jumping in the call and needing to ask that to Sam and Martha whilst they're focused, I can actually just ask that right here in incident.io. So let's say, "Based on the recent discussion on the call, is there something I can help with?"

Just whilst that's thinking, I wanna emphasize that this is all based on that same underlying technology that Lawrence has just shown you. And it has incredibly rich context. So it knows about the call, it knows about everything in Slack, and everything that AI SRE has found too. You can see it. Thanks, I'm Peter.

Just excuse that for a moment. It says, yes, it looks like the team does want my support in this particular situation. So what I'm gonna do here is actually go say hello to Sam and Martha and see how I can help.

Hey folks. You are live on stage at SEV0. I'm obviously a little bit busy right now, but how can I help?

Sam: Ed, for the purposes of today's demo, it is incredibly convenient that you're here. It...

Ed: It's almost like we planned this here, Sam.

Sam: Yeah, we're a bit busy here too. We were wondering, would you be able to help us with sending comms out to the affected customers and update the incident? It'll give Martha and I a chance to get this fix out, and the incident's looking a bit sad and stale at this point.

Ed: For sure. Happy to help. Before I do reach out to any customers, do we have a timeline on a fix that we can share with them?

Martha: Yeah.
Sam's just approved my PR, so I'm gonna go ahead and merge it. Should be out in the next 10 minutes if everything goes to plan.

Ed: Awesome. Sounds great. I'll leave you to it then. Cool. So now I can head back to that incident. The first thing that you're gonna see is that Scribe has already updated its notes here with the stuff that we've been discussing whilst I was on the call, and it was showing that I was on that call as well.

So this is all happening in real time. The other thing I wanna show you that Scribe is doing, as we come down to the timeline, is that it's picking out all of the key moments that are being discussed and putting those on the incident timeline automatically. You can imagine just how useful that's gonna be when it comes to writing your postmortem later, instead of having to look through raw transcripts or maybe have no notes at all and try and figure out what happened.

You have all this information here by default. Okay, cool. As a next step, maybe I'll actually go and do those things to help out Sam and Martha. In this case, what I wanna do is create actions for all the points we discussed on the call and assign them to the right people. So I saw in the notes before that Sam and Martha were already discussing some actions they were taking, but it also sounded like I should help with comms.

From here I just wanna make sure that's all tracked correctly. Again, I just wanna emphasize this is able to pull information directly from the call. So everything Scribe is seeing you can access here as well. The other thing I'm noticing, just whilst this is thinking, is that this name is not very helpful and I think we can probably do a little better.

So yeah, this is not super clear and I think we can do better. So how about we say, "Can you suggest a better name for this incident?"
So as well as just being able to change the name here, it's actually able to manipulate essentially all of the attributes on your incident, so it can update the incident for you, write summaries as you've just seen, and create actions with assignees as well. It's all natively integrated with our response product and incident.io.

Okay. Yeah, that looks much clearer, so I can go ahead and accept that. In this case, I asked for a suggestion, so that's exactly what it came back with. It's also able to manipulate these things directly, just like it did with the actions there.

Okay, cool. Before I actually go ahead and reach out to the customers, I just wanna make sure I have a clear understanding of the impact of the incident so far. So let's say, "Which customers has this incident affected?" Again here, it can be drawing from anywhere actually in the incident context. So maybe this wasn't discussed on the call, but it could have been discussed in Slack, or maybe AI SRE found this in something it looked into.

And there you go. It's able to identify the five customers that have been affected. From there, maybe I want to enrich it with even more information though, so I can do something like, "Search Catalog to find the revenue impact for each of those customers." In this situation, this information actually isn't in the context of the incident.

It's not in the call, it's not in the channel, but there's an agent here that can go look up those customers in our Catalog and then find any related attributes in Catalog. That works similarly here for revenue. But I can ask it more questions too: "Who are the CSMs for those customers?"

Obviously this sort of information is gonna really help me in this situation.
It's gonna help me understand the total impact in terms of revenue, but also, say, knowing the CSMs will enable me, when I do reach out to customers, to give them the right point of contact to come back to if they have any questions.

The way this is working under the hood is all of this information is synced from our Salesforce integration right into Catalog, and then is accessible right here by default. Cool. All of that looks good. Final thing I want to do: there's lots of useful information here, and I just wanna make sure this is actually captured in a structured way on our incident.

So we have it for later when it comes to things like analytics. So I'll say, "Can you update the Affected Customers field, please?"

There we go. As I do that, I'm just gonna switch straight over to properties and scroll down. And it should any moment be able to update those fields directly. So here you have all of those customers marked against the incident. You'll also see we have derived custom fields here as well.

So the revenue impact, which we just discussed, has also been summed and added to this incident. Perfect. So I think we're looking good here. At this point I'm gonna hand back over to Pete, who's gonna show you the next steps from here once we actually close this incident out and we're ready to review and learn from it.

Pete: I guess you're still there, a little bit more about what happens, as Lawrence said, when you take some of the underlying technology and you start to bring it to other areas of the product, and that's what I'm excited to talk about for the last bit of this presentation. So the third thing that we're gonna show you, and so far, the fact that the only thing that's gone wrong has been literally the HDMI cable, it's very reassuring. Let's head over and see if we can continue the winning streak.
So to call it out: a lot of work in postmortems, which is what we're gonna talk about now, goes into pulling together tons of information from different sources. Even within incident.io, Ed showed all the key moments from Scribe. That's great.

But if I have to go through a ton of different tools and do endless copy-pasting for hours and hours to assimilate all of that into some kind of cohesive story and narrative, it's gonna take a ton of time and a ton of work. Inherently not a bad thing; postmortems are for learning. And I wouldn't for a second suggest that we get to the end of an incident, we say "generate a doc", and that's the learning done.

We all know that's not the point. That's not the reason that we write them. But I think a platform like incident.io could do a ton more to help with potentially that first pass: gather all the information, do a first draft, give me something to riff on. For many of us, we work better taking something that's mostly there and helping to make it really good.

And so that's what we've set out to do. And today we're trying to introduce a few fundamental changes to how postmortems work in the platform. Specifically around: how do we write them, how do we get that first draft put together? How do we learn from them? How do we ask questions?

Things like that, even if you weren't necessarily in the incident. And how do we collaborate on them as a team, right? These are all things we care a lot about. So I pulled up a recent incident for my team here, and if I hop over into the postmortem tab, we'll be presented with this shiny orange button that is desperate to be clicked, which is exactly what I'm gonna do.

And then, flawlessly, this is gonna work. As you would expect by this point, just to belabor it, AI SRE is taking all of the context from the incident, except now it's looking at the instructions that we've put in our incident postmortem template, which is already a feature of...
There we go.

I didn't even have time for my patter. Team, you are too quick. But it's taking what we already have, which is a really good template, and we've given guidance for humans. And what it's done is take that and all the context and try to take a first pass. I just wanna call out a few things that we're seeing on the screen here.

So firstly, this draft is not just text. It's actually managed to interpret a lot of the references, like timestamps, for example. This is the time it was reported at, and that's cool 'cause it means, rather than a Google Doc or Notion or something like that, we actually know what this timestamp is.

It has meaning in the context of incident.io. And we also have things like durations, like we know that from the time it was reported to the time it was resolved was about one hour, 16 minutes. We know about users. So for example, Tom. I know who Tom is, obviously, he's on my team, but in a large company, when I've been in organizations with hundreds of engineers, I've read postmortems where it's referring to people and systems and roles that, like, I don't even understand, I've never seen this before.

If I go in here, I can see Tom was the incident lead, and he's from the response team. That gives me a ton of context. If I wanted to, I could jump in and go look at him, look him up in Catalog, find him in Slack, et cetera. And then, we obviously reference things like GitHub pull requests, and then other things we can do that I think are cool is, if I were to reference other incidents, for example, so if I were to do INC-15126, I can see here, I can pull in past incidents and I see a nice little preview hover card.

This is the kind of postmortem-specific editor experience that we are able to build as an incident response platform. It's just a notch up from the Notions and Google Docs that we typically use ourselves at the moment day to day, pending this being in production. Well, it is in production.
Other things that I wanna call out: I haven't done it here other than adding this, but to call it out, this is obviously an editor. I can say, "Hey, SEV0." It's real. I think also things I can do are, I can not just edit the text, but I can edit properties on my incident.

How often have you written your postmortem, realized something was wrong, gone and changed it in some other system? Then you have to go back, update the document. Lists drift, timestamps drift, everything gets out of date. Because this is all inside the incident.io platform, if I just go down here and, let's see for a second, what can I do?

I'm gonna pick on this one. So let's say, oh, actually we realized it was reported an hour before we said it was. You saw up here it was 11 o'clock. If I go update that timestamp, you see it changes to 10. Now that is not, like, wildly impressive, but I think it just keeps everything really up to date.

What I think is cool is everything updates. So all the durations that depended on that are now completely in sync. And so this is, I think, the future of what a great postmortem inside an end-to-end platform could look like. Other things that I wanna call out: at the bottom, it's not just rendered text.

If I keep scrolling down and go show you some other stuff, we've actually rendered the timeline live into the document. Obviously, it can decide what gets shown, what doesn't get shown. I can choose how it gets shown. I can decide to show a little bit more detail here if I want to do that. And it's also listed out some follow-ups here and shows the real-time synchronous state of where these are at.

Have they been done in Jira, in Linear? If you've ever listed out follow-ups in a document and then come back to it later to go, did we actually ever do that stuff? You don't have that question here. It's all live inside the document, even though we're working inside issue trackers elsewhere.
Ed showed a couple of things a minute ago in chat terms, and these are all available in postmortem context as well.

Obviously, in addition to all the things that Ed mentioned, it now also understands, in this context, the document, the content, the structure, everything about this. And so I can ask, let's kick off with a general question. Let's say, "Are there any follow-ups I've missed?" I hope not.

So that's, like, a very easy one. It's: go look at all of the context you hold, look at the follow-ups that we've already listed. Have we captured everything? All follow-ups are tracked; none appear to be missing. Great stuff. I can also, though, ask more specific questions.

So maybe I come in without a huge amount of context. I don't know these customers, for example. So I can say, let's highlight these and let's go ask AI SRE, "Which plans are these customers on?" And I'll spell plans right, just to give it a helping hand. And it can take just that selection, and I can ask a question on just that bit of the postmortem, and it will feed that into the query that it's making and it'll give me really precise, tailored responses.

And again, this is not just, like, pulling it from Slack. This is gonna pull it out from Catalog. It's gonna link the customer to our CRM data. It's gonna go figure out what plans they're on. And you can see here, a lot of enterprise customers, so probably not such a great incident. So these are, I think, some really cool examples of what you can start to do when you bring the power of the AI platform into context.

Even postmortems, right? There's a huge amount that we can improve. The last thing I wanna call out: so far, it's just been me. There's probably a lot of postmortems in my life that have just been me, if I'm honest. But they're always better if you're doing 'em with friends.

And this is no different. Obviously, the goal of a postmortem is not to do it on an individual basis; it's to collaborate.
It's to share the learnings with your team. It's to bring other people into the learning experience. And there's obviously some simple ways we can do that. I can say, "Blocking updates critical to on-call schedules."

I can say, I don't know, let's grab Martha. She's already on demo mode. "Martha, what does this mean?" And obviously then it'll ping Martha and say Pete commented. We've all used a document editor before, and that's cool. But what I think is really cool is that when Martha drops in and replies, she can turn up in the document and she can edit everything live.

So we have built everything you've just seen entirely real time and collaborative, and any number of people on your team could pile in and edit this all at the same time. Really familiar features, but I think it's really cool to combine that with all of the rich details and all the rich features you just saw.

Martha could be writing this, someone else could be asking questions, someone else could be looking at the references and getting up to speed. And if we wanted to, and we are feeling particularly crazy, given that the rest of the demo seems to have come off without a hitch, we could do something like say, "Team, if anyone does have that laptop open and you wanna pile in, feel free." And everyone else could get involved as well.

Yeah. Awesome stuff. Postmortems like this are such a rich source of knowledge and information for organizations. They're honestly my favorite bit. I think literally one of the first times I met Chris at Monzo was nerding out in a debrief session.

And I think our hope is that with these kinds of changes, we are really starting to make this a platform where you can see your incident through from start to finish.
And it goes without saying (I think Ed might have touched on it earlier) that everything you learn through your postmortem and write-ups, everything your team do, all the questions that they ask, all the answers they get, the comments, the extra bits that they're adding here, AI SRE will learn all of that too.

The incident.io platform has that context now. So next time you have your incident, we can be even smarter. That is postmortems.

Cool. So that went surprisingly well. What's next? Lots of exciting upcoming product that you've seen there. And to call it out again, we've not fabricated all of this. This is real. You can go to, I think it's Showtime (Tom will kill me if I got that wrong)... School Night.

Goddammit. Yeah, you can tell I've been focusing on other things. So yeah, if you go to School Night, there's a couple of demonstrations set up and you can literally go and poke and prod and see the team walking through, admittedly, a much more contrived incident in a smaller space. But that was a real one that was in our production environment.

Real code, real telemetry, real everything. For those of you already using incident.io, of which there are many in the room, most of what you saw that was specifically around AI SRE, that's available today. You can try that. Some of you are already trying it. We saw a bunch of quotes from that earlier today.

So you should see, if you haven't already, all of that in your account very soon. The rest is coming very soon. We've got a few little bits that we wanna clean up in postmortems, but we're talking weeks rather than months, and we're excited to get everything into your hands. Before I close out, I just wanted to, let's call it a vulnerable moment.

Let's go back four years. And I found this old screenshot where I was going, like, what did it even look like when we started this thing? 'Cause it's so hard to tell the difference now.
So this is a genuine screenshot of the first version of the product that Steve and Chris and I built around, I think it was, my kitchen table back in the day.

It did a few predefined actions in Slack. It was like a sort of glorified Slack bot with, I think we had one integration with status pages that let me post updates to the web, and a rudimentary web UI. I was really proud of this. The team will probably be laughing their heads off given what you've just seen they can do.

And if you compare that to today, where we have an end-to-end, AI-native incident response platform, it's night and day. I'm so proud of everything the team have built. You guys have absolutely crushed it. And we're only just getting started. We're four years in. We're barely going at this point.

We have a long way to go, but we're gonna get there as fast as we possibly can, just like we have for the last four years. And we're planning to get all of this into as many hands as possible as soon as possible. We're really excited. I hope you guys have enjoyed seeing what we've been up to.

I hope it was worth the wait. I'm glad the only thing that went wrong was the AV Zucker, Zuckerberg. And, yeah, if you wanna dig deeper, see us in School Night. Thank you very much.

San Francisco 2025 Sessions

The evolution of incident management

Martin Smith, Principal Architect, SRE, Nvidia

View session

From error to insight: Human factors in incidents

Molly Struve, Staff Site Reliability Engineer, Netflix

View session

Our data disappeared and (almost) nobody noticed: Incident lessons learned

Michael Tweed, Principal Software Engineer, Skyscanner

View session

Claude Code for SREs

Kushal Thakkar, Member of Technical Staff, Anthropic

View session

incident.io Product Showcase

Pete Hamilton, Co-founder and CTO, incident.io; Lawrence Jones, Principal Engineer, incident.io; Ed Dean, Product Manager, AI, incident.io

View session

Boundary Cases: Technical and social challenges in cross-system debugging

Sara Hartse, Software Engineer, Render

View session

SEV me the trouble: Pre-incidents at Plaid

Derek Brown, Head of Security Engineering, Plaid

View session

Navigating disruption: Zendesk's migration journey to incident.io

Anna Roussanova, Engineering Manager, Zendesk

View session

What Real Housewives taught me about postmortems

Paige Cruz, Principal Developer Advocate, Chronosphere

View session

Security without speed bumps: Making the secure path the easy path

Dennis Henry, Productivity Architect, Okta

View session